Using Dependency Tracking to Provide Explanations for Policy Management

Lalana Kagal

Decentralized Information Group

MIT Computer Science and Artificial Intelligence Laboratory

Talk Outline

- Why do we need explanations?

- AIR Policy Language

- Explanations in AIR

- Dependency tracking

- Explanation generation

- Automatic explanations

- Customizing explanations

- Problems & Future Work

Importance of Explanations

- Explanations for policy decisions allow users to understand how the results were obtained

- Increase trust in the policy reasoning and enforcement process

- Used by policy administrators to confirm the correctness of the policy and to check that the result is as expected

- In the case of failed queries, they can be used to figure out what additional information is required for success

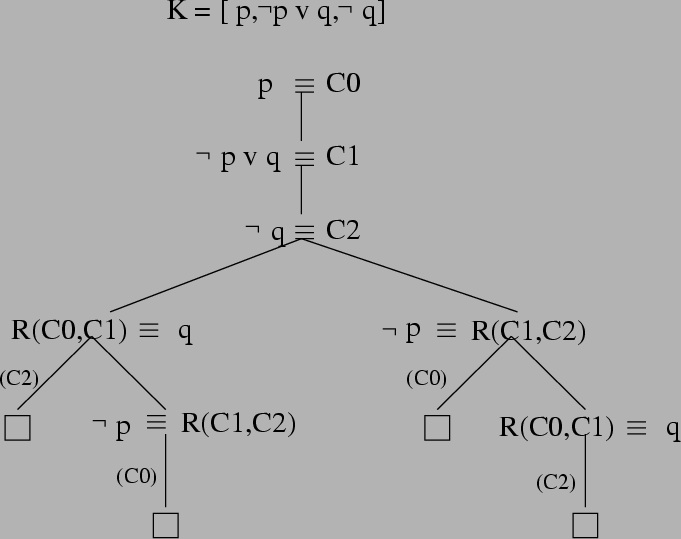

Why aren't proofs enough?

Image courtesy: http://clip.dia.fi.upm.es/~logalg/slides/

AIR Policy Language

- rule-based policy language for accountability and access control

- explanations for policy decisions through dependency tracking

- customizable explanations, if required

- more efficient and expressive reasoning through the use of goal direction

- mainly forward chaining

- backward chaining is used to limit the search space

- grounded in Semantic Web technologies for greater interoperability, reusability, and extensibility
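The combination of forward chaining with a goal-directed backward pass can be pictured with a small propositional toy. This is a sketch, not how the AIR reasoner is implemented: rules here are hypothetical (premises, conclusion) pairs with no variables or graph patterns. A backward pass over rule conclusions picks out the rules that could contribute to a goal, and only those rules are forward-chained.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    name: str
    premises: frozenset  # propositions that must all hold
    conclusion: str      # proposition derived when they do


def relevant_rules(rules, goal):
    """Backward pass: keep only the rules that can contribute to `goal`."""
    needed, frontier, keep = {goal}, [goal], []
    while frontier:
        target = frontier.pop()
        for rule in rules:
            if rule.conclusion == target and rule not in keep:
                keep.append(rule)
                # Premises of a useful rule become new sub-goals.
                for premise in rule.premises - needed:
                    needed.add(premise)
                    frontier.append(premise)
    return keep


def forward_chain(facts, rules):
    """Forward pass: fire rules until no new proposition is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.premises <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known
```

With a goal such as "can_view", a rule that only derives an unrelated proposition is pruned before forward chaining starts, which is the sense in which the backward pass limits the search space.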

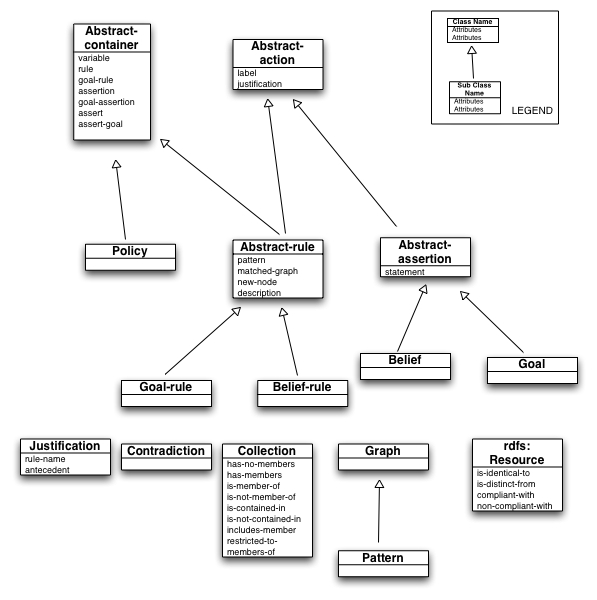

Dependency Tracking


AIR specifications

```n3
:Policy1 a air:Policy;
    air:rule [
        air:pattern { ... };
        air:assert { ... };
        air:rule [ ... ]
    ].
```
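The nesting in :Policy1 can be modeled in a few lines of Python. This is a sketch under stated assumptions, not the AIR reasoner's code: it assumes, as the nesting suggests, that an inner rule is tried only after the outer rule's pattern has matched, with the outer bindings carried into it. Triples are plain string tuples, terms starting with "?" stand in for declared variables, and the `Rule`, `match`, and `run` names are hypothetical.

```python
from dataclasses import dataclass, field

Triple = tuple[str, str, str]


@dataclass
class Rule:
    pattern: list                              # triples; "?x" terms are variables
    assert_: list                              # triples asserted for each match
    rules: list = field(default_factory=list)  # nested rules


def match(pattern, facts, binding):
    """Yield every variable binding under which all pattern triples are facts."""
    if not pattern:
        yield binding
        return
    first, rest = pattern[0], pattern[1:]
    for fact in facts:
        b = dict(binding)
        ok = True
        for term, value in zip(first, fact):
            if term.startswith("?"):
                # Bind a fresh variable, or check an existing binding.
                if b.setdefault(term, value) != value:
                    ok = False
                    break
            elif term != value:
                ok = False
                break
        if ok:
            yield from match(rest, facts, b)


def run(rule, facts, binding=None):
    """Fire `rule`; nested rules run only under a successful outer match."""
    derived = set()
    for b in match(rule.pattern, facts, binding or {}):
        derived |= {tuple(b.get(t, t) for t in triple) for triple in rule.assert_}
        for inner in rule.rules:
            derived |= run(inner, facts | derived, b)
    return derived
```

If the outer pattern never matches, the nested rule is never tried, so nothing it would assert appears in the result.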


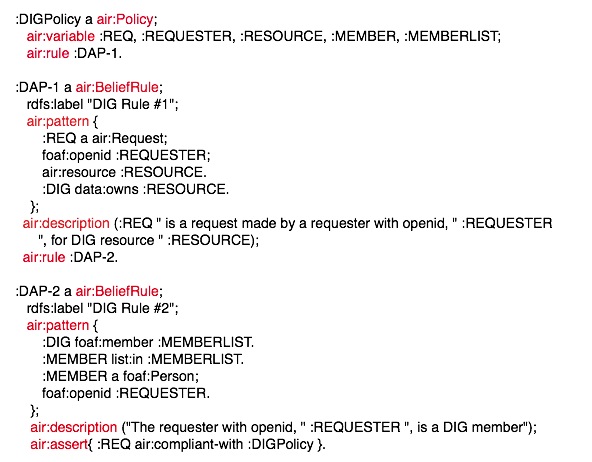

AIR specifications

- Variables (air:variable)

- used to declare variables that can be used inside patterns

- the scope of a variable is the container in which it is declared. If the variable is bound before a rule is invoked, it is passed to that rule as a constant

- Rule descriptions (air:description)

- a list of variables and strings that are concatenated to form the natural-language (NL) description of the rule

```n3
:Policy2 a air:Policy;
    air:variable :VAR1;
    air:rule [
        air:variable :VAR2;
        air:pattern { ... };
        air:assert { ... };
        air:description (:VAR1 " is a variable that is declared in :Policy2 and " :VAR2
                         " is a variable that is declared in this rule");
        air:rule [ ... ]
    ].
```
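The scoping rule for air:variable can be sketched as a chain of containers. The Python below is an illustrative model, not part of AIR: `Scope` and `prepare_pattern` are hypothetical names, and a term counts as a variable only if it is declared in the current container or an enclosing one. Variables that are already bound when a rule is invoked are substituted before matching, so the rule sees them as constants.

```python
from typing import Optional


class Scope:
    """A container (a policy or a rule) that declares air:variable names."""

    def __init__(self, declared, parent: Optional["Scope"] = None):
        self.declared = set(declared)
        self.parent = parent

    def resolves(self, term):
        """True if `term` is declared here or in an enclosing container."""
        scope = self
        while scope is not None:
            if term in scope.declared:
                return True
            scope = scope.parent
        return False


def prepare_pattern(pattern, scope, binding):
    """Substitute in-scope variables that are already bound, so the rule
    matches them as constants; all other terms are left untouched."""
    return [tuple(binding[t] if scope.resolves(t) and t in binding else t
                  for t in triple)
            for triple in pattern]
```

In this model :VAR1 (declared on :Policy2) is visible inside the rule, while :VAR2 (declared on the rule) is not visible at the policy level.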

AIR Tools

- AIR Reasoner

- accepts data and a policy

- produces the result of running the policy over the data

- available via HTTP:

http://mr-burns.w3.org/cgi-bin/server_cgi.py?logFile="log file"&rulesFile="policy file"

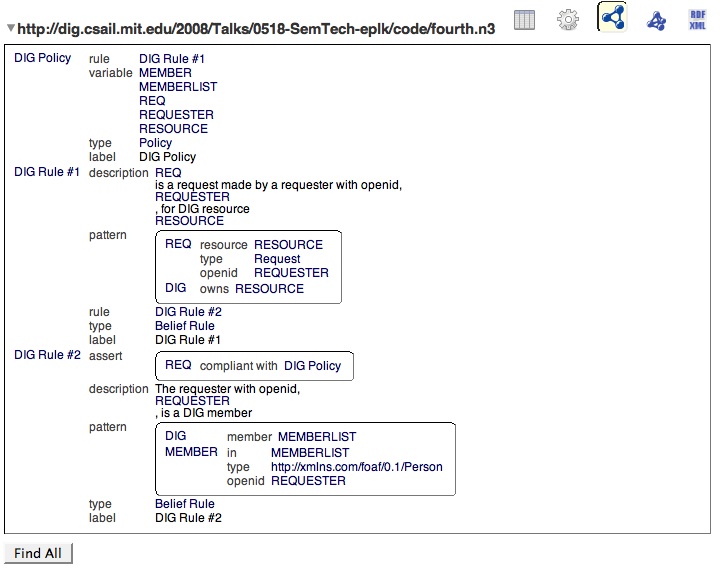

- Justification UI

- accepts reasoning results

- provides a textual display

- allows exploration of a graphical display

- Firefox Extension: http://dig.csail.mit.edu/2007/tab/

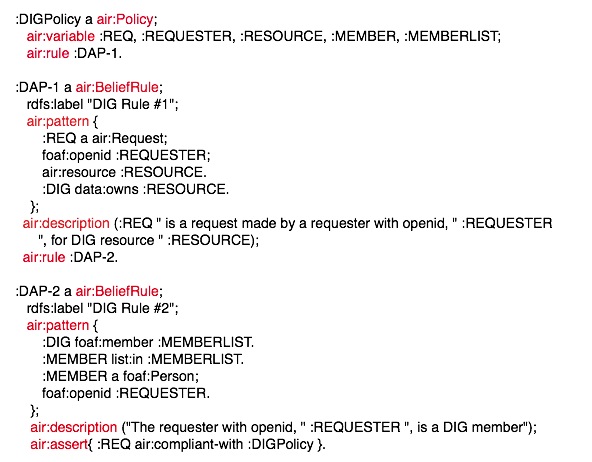

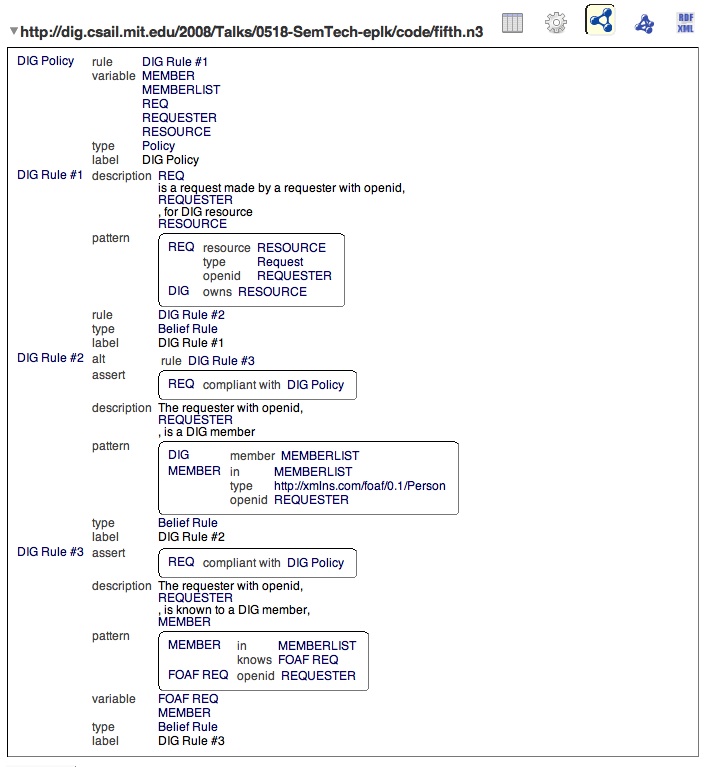
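For scripted use, the reasoner's HTTP interface can be called by building the query string programmatically. The Python sketch below assumes that the two parameters take the URIs of the data (log) file and the policy file, that the quotes on the slide only mark placeholders, and that the service returns the reasoning result as text. `reasoner_query_url` and `run_reasoner` are hypothetical helper names, and the host given on the slide may no longer be reachable.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Endpoint as given on the slide; it may no longer be online.
REASONER_URL = "http://mr-burns.w3.org/cgi-bin/server_cgi.py"


def reasoner_query_url(log_file: str, rules_file: str) -> str:
    """Build the GET URL: logFile is the data, rulesFile is the policy."""
    query = urlencode({"logFile": log_file, "rulesFile": rules_file})
    return f"{REASONER_URL}?{query}"


def run_reasoner(log_file: str, rules_file: str, timeout: float = 30.0) -> str:
    """Fetch the reasoning result (assumed to be returned as UTF-8 text)."""
    with urlopen(reasoner_query_url(log_file, rules_file), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

`urlencode` percent-encodes the file URIs, so they can be passed safely as query-parameter values.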

Simple AIR Example

All DIG members are allowed to view resources owned by the DIG group

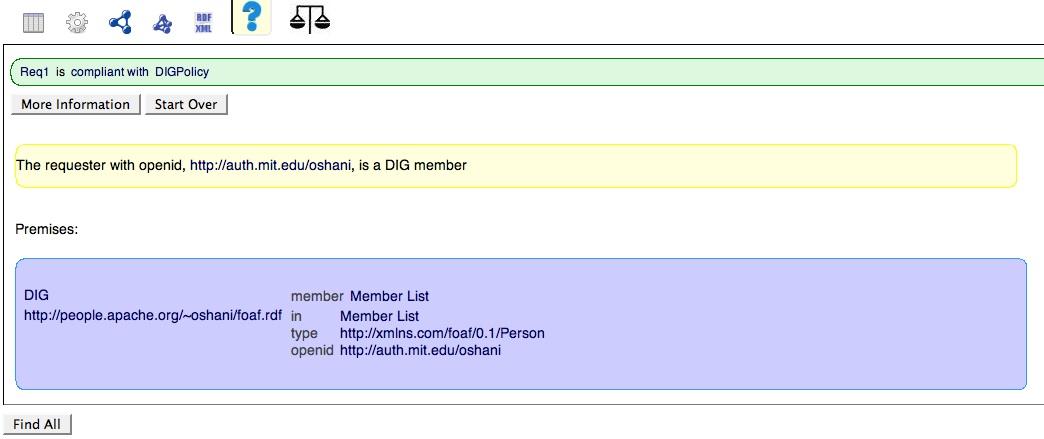

Viewing Policy in UI

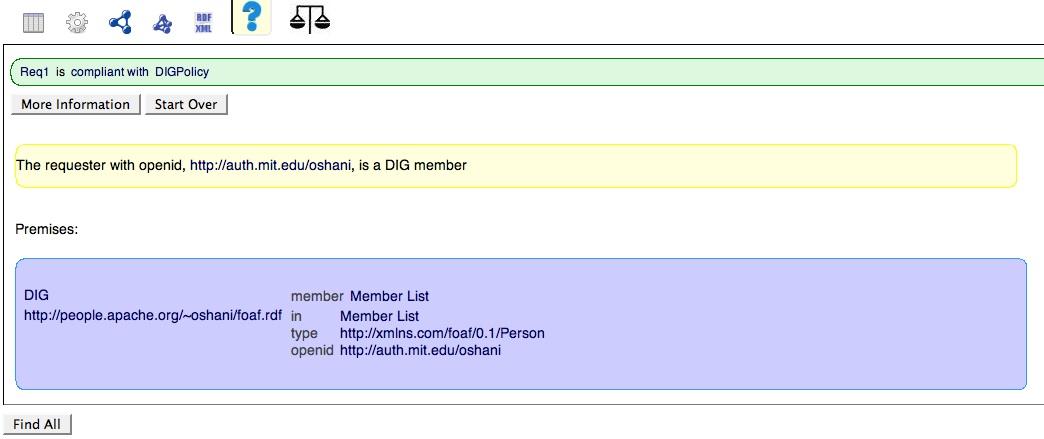

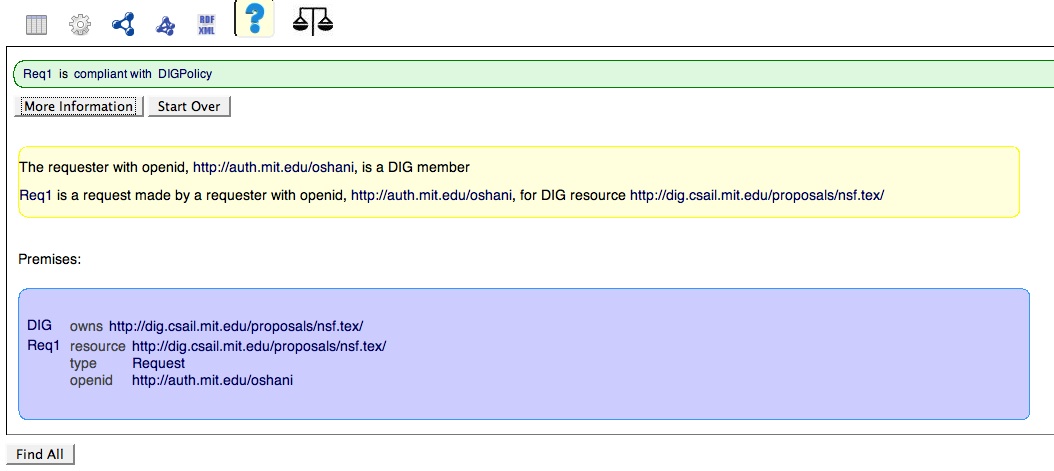

Explanation


Generation of Explanations

- While reasoning, the reasoner maintains dependencies of all conclusions

- Rules in the dependency sets are annotated with NL descriptions

- The result of reasoning is provided to the Justification UI

- The required conclusion is identified

- The dependencies of that conclusion are extracted
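The steps above can be sketched in Python: a forward chainer records, for every new conclusion, the rule that produced it and the facts it depended on, and an explanation is then extracted by walking those dependencies from the required conclusion. This is an illustrative model only. Triples are plain string tuples, rules are hypothetical functions from facts to (conclusion, antecedents) pairs, and the rule name "ViewRule" and the predicates :memberOf, :ownedBy and :canView are made up for the DIG example; "DAP-2" reuses the name of the membership rule.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    description: str             # NL template; {0}, {1}, {2} are the conclusion's terms
    fire: Callable[[set], list]   # facts -> [(conclusion, antecedent facts)]


def reason(facts, rules):
    """Forward-chain to a fixpoint, recording the dependencies of each new fact."""
    known = set(facts)
    deps = {}  # conclusion -> (rule that derived it, antecedent facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            for conclusion, antecedents in rule.fire(known):
                if conclusion not in known:
                    known.add(conclusion)
                    deps[conclusion] = (rule, tuple(antecedents))
                    changed = True
    return known, deps


def explain(fact, deps, depth=0):
    """Extract the dependencies of `fact` as indented NL lines."""
    indent = "  " * depth
    if fact not in deps:
        return [f"{indent}{fact} is given in the input data"]
    rule, antecedents = deps[fact]
    lines = [f"{indent}{rule.description.format(*fact)} ({rule.name})"]
    for antecedent in antecedents:
        lines.extend(explain(antecedent, deps, depth + 1))
    return lines


# Hypothetical rules for the DIG example.
def member_rule(facts):
    return [((s, ":memberOf", ":DIG"), [(s, p, o)])
            for (s, p, o) in facts if p == "air:in" and o == ":MEMBERLIST"]


def view_rule(facts):
    members = [f for f in facts if f[1:] == (":memberOf", ":DIG")]
    owned = [f for f in facts if f[1:] == (":ownedBy", ":DIG")]
    return [((m[0], ":canView", r[0]), [m, r]) for m in members for r in owned]


RULES = [Rule("DAP-2", "{0} is a DIG member", member_rule),
         Rule("ViewRule", "{0} may view {2}", view_rule)]
```

Explaining (":alice", ":canView", ":doc1") yields the view step first, then the membership step nested beneath it, then the input facts at the leaves.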

More AIR Specifications

- So far we've seen air:Policy, air:variable, air:BeliefRule, air:pattern, air:description, air:assert

- Alternative rule (air:alt)

- An alternative rule becomes active if the pattern of the containing rule fails to match.

- The air:alt property is used to assert closure over a set of facts.

- In the following example, if the pattern of :RuleB matches, its assertion fires; otherwise the alternative rule, :RuleC, becomes active.

```n3
:RuleB a air:Belief-rule;
    air:variable :MEMBER;
    air:pattern {
        :MEMBER air:in :MEMBERLIST.
    };
    air:assert { :DIG foaf:member :MEMBER };
    air:alt [ air:rule :RuleC ].

:RuleC a air:Belief-rule;
    # ...
```

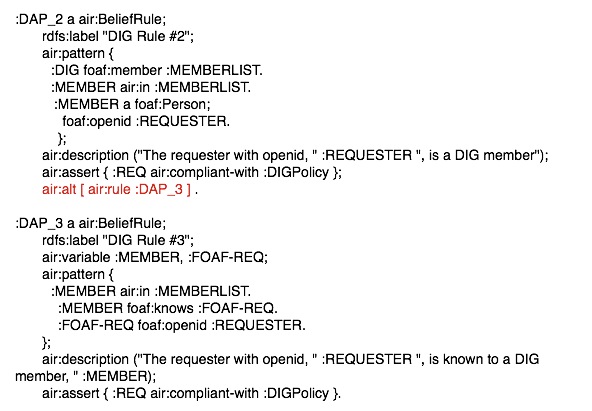

Alternative rules

- Extending the original policy

- If the requester is not a DIG group member, then check whether the requester is known to any of the DIG members.

- This rule is an alternative to rule :DAP-2, defined earlier
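The extended policy's fallback behavior can be sketched in Python: a rule fires once per match of its pattern, and only when there is no match does its alternative rule become active. This is a hypothetical model, not AIR syntax or the reasoner's code. The requester ":bob", the rule name "KnownByMember", and the predicate ":knownBy" are illustrative; triples follow FOAF's convention that foaf:member points from the group to the member.

```python
from typing import Callable, NamedTuple, Optional

Fact = tuple[str, str, str]


class Rule(NamedTuple):
    name: str
    match: Callable[[set], list]        # facts -> list of variable bindings
    assertion: Callable[[dict], Fact]   # binding -> asserted triple
    alt: Optional["Rule"] = None        # becomes active only if `match` fails


def apply(rule, facts):
    """Fire `rule` on every match; if its pattern fails, try its alt rule."""
    bindings = rule.match(facts)
    if bindings:
        return [(rule.name, rule.assertion(b)) for b in bindings]
    if rule.alt is not None:
        return apply(rule.alt, facts)
    return []


REQUESTER = ":bob"  # hypothetical requester


def listed_as_member(facts):
    # pattern: REQUESTER air:in :MEMBERLIST
    return [{}] if (REQUESTER, "air:in", ":MEMBERLIST") in facts else []


def known_by_member(facts):
    # pattern: :DIG foaf:member ?m . ?m foaf:knows REQUESTER
    return [{"?m": s} for (s, p, o) in facts
            if p == "foaf:knows" and o == REQUESTER
            and (":DIG", "foaf:member", s) in facts]


KNOWN = Rule("KnownByMember", known_by_member,
             lambda b: (REQUESTER, ":knownBy", b["?m"]))
DAP2 = Rule("DAP-2", listed_as_member,
            lambda b: (":DIG", "foaf:member", REQUESTER), alt=KNOWN)
```

Because the alternative only runs when the primary pattern finds nothing, the result records which branch succeeded, which is the information an explanation of an alternative rule would need.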

Viewing Policy in UI

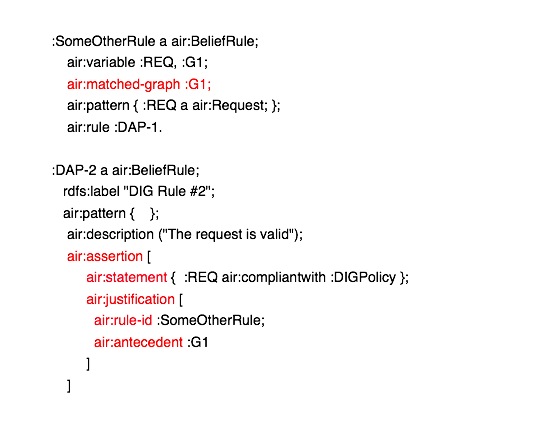

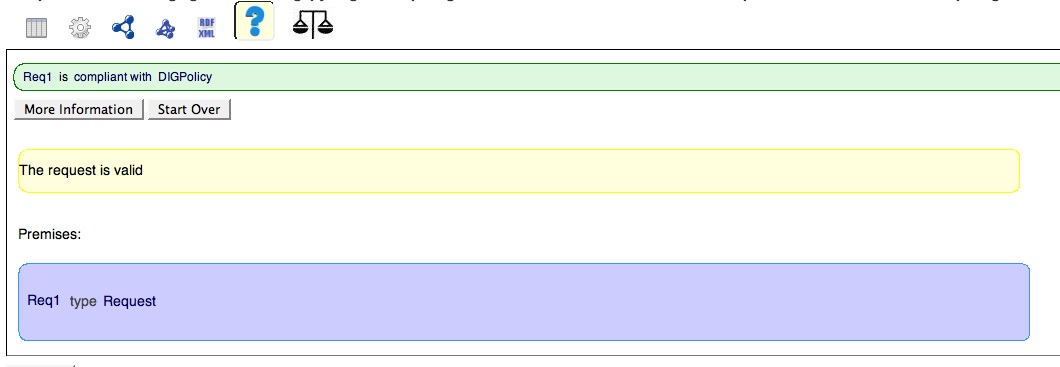

Customizing Explanations

Why

- Using dependency tracking, we are able to generate explanations for every conclusion reached by the reasoner

- The explanation may be too lengthy; we might want to reduce the number of steps

- The explanation may expose too much information about the policy; we might want to protect our policy

Customizing Explanations

How

- Hidden rules: prevent the deduction steps of a rule from appearing in the explanation

```n3
:RuleB a air:Hidden-rule;
```

- Rule descriptions: lists of variables and strings that are put together to form NL descriptions of rules

```n3
:DAP-2 a air:BeliefRule;
    air:description ("The requester with openid, " :REQUESTER ", is a DIG member").
```
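Both customizations can be sketched as a post-processing step over the recorded reasoning steps: steps of hidden rules are dropped, and each remaining step is rendered by joining its description's strings with the values bound to its variables. This is a hypothetical Python model; `Var`, `Step`, `render`, and `customized_explanation` are illustrative names, and the OpenID value in the usage below is made up.

```python
from typing import NamedTuple


class Var(NamedTuple):
    """Stand-in for a declared variable such as :REQUESTER."""
    name: str


class Step(NamedTuple):
    rule: str
    hidden: bool         # True if the rule is typed air:Hidden-rule
    description: tuple   # strings and Vars, in air:description order
    binding: dict        # Var -> value bound when the rule fired


def render(step):
    """Concatenate literal strings with the values bound to variables."""
    return "".join(str(step.binding.get(p, p.name)) if isinstance(p, Var) else p
                   for p in step.description)


def customized_explanation(steps):
    """Drop the steps of hidden rules; describe the rest in NL."""
    return [render(s) for s in steps if not s.hidden]
```

With :RuleB hidden and :REQUESTER bound to an OpenID, only the :DAP-2 sentence "The requester with openid, ..., is a DIG member" would remain.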

Customizing Explanations

How

- Modify dependencies: use AIR constructs to change the actual dependencies of a conclusion

- instead of air:assert, the air:assertion property of the rule is used

- the value of air:assertion is of type air:Assertion

- the air:Assertion class is composed of two components:

- air:statement, the set of triples being asserted

- air:justification, the explicit justification to be associated with the statement; it is of type air:Justification

- the air:Justification class consists of two properties:

- air:rule-id, which can be set to the name of the rule to which the assertion is to be attributed

- air:antecedent, a list of matched graphs that act as the premises. The matched graph of a rule can be obtained by using the rule's matched-graph property with a variable.
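The effect of an explicit justification can be sketched as an override of what the reasoner would otherwise record. The Python below is a hypothetical model of the vocabulary above, not the AIR reasoner's code: by default each asserted triple depends on the firing rule and its matched graph; when an `Assertion` carries a `Justification`, its rule id and antecedent graphs are recorded instead. The rule name ":HelperRule" and the predicate ":mayView" are illustrative.

```python
from typing import NamedTuple, Optional


class Justification(NamedTuple):
    rule_id: str        # models air:rule-id
    antecedent: tuple   # models air:antecedent (a list of matched graphs)


class Assertion(NamedTuple):
    statement: frozenset                           # models air:statement (triples)
    justification: Optional[Justification] = None  # models air:justification


def record(assertion, firing_rule, matched_graph, deps):
    """Store the dependency of each asserted triple. By default it is the
    firing rule and its matched graph; an explicit justification overrides it."""
    if assertion.justification is None:
        just = Justification(firing_rule, (matched_graph,))
    else:
        just = assertion.justification
    for triple in assertion.statement:
        deps[triple] = just
    return deps
```

An explanation built from these records attributes the conclusion to the rule named in air:rule-id, so an intermediate helper rule no longer appears among its dependencies.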

Customizing Explanations - Example


Original Policy


Modifying Dependencies


Original Explanation

Customizing Explanations - Example

Future Work

- Unhelpful explanations are generated for alternative rules

- Multi-level rule descriptions

- Pattern matching is not scalable

- Some Firefox issues with the Justification UI

Summary

- AIR Policy language

- explanation generation

- dependency tracking

- mechanisms for customized explanation

- AIR tools

- AIR reasoner

- Justification UI

More Information

- AIR specifications: http://dig.csail.mit.edu/TAMI/2007/AIR/

- AIR ontology: http://dig.csail.mit.edu/TAMI/2007/amord/air.ttl

- Demo: http://dig.csail.mit.edu/2008/Talks/0518-SemTech-eplk/code/

- How to use the Justification UI: http://dig.csail.mit.edu/TAMI/2008/JustificationUI/howto.html